Qt3d + Oculus SDK

-

Hello,

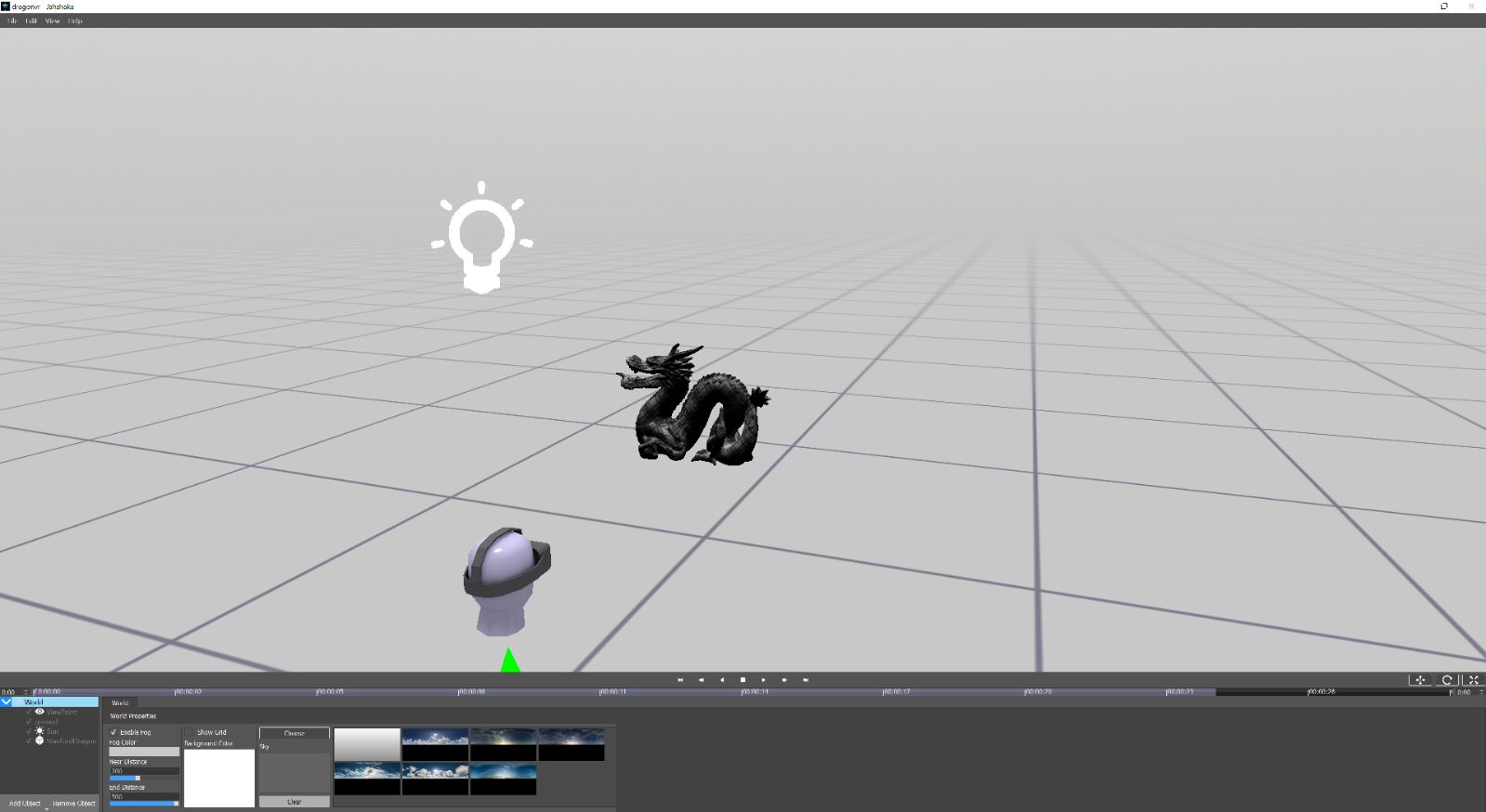

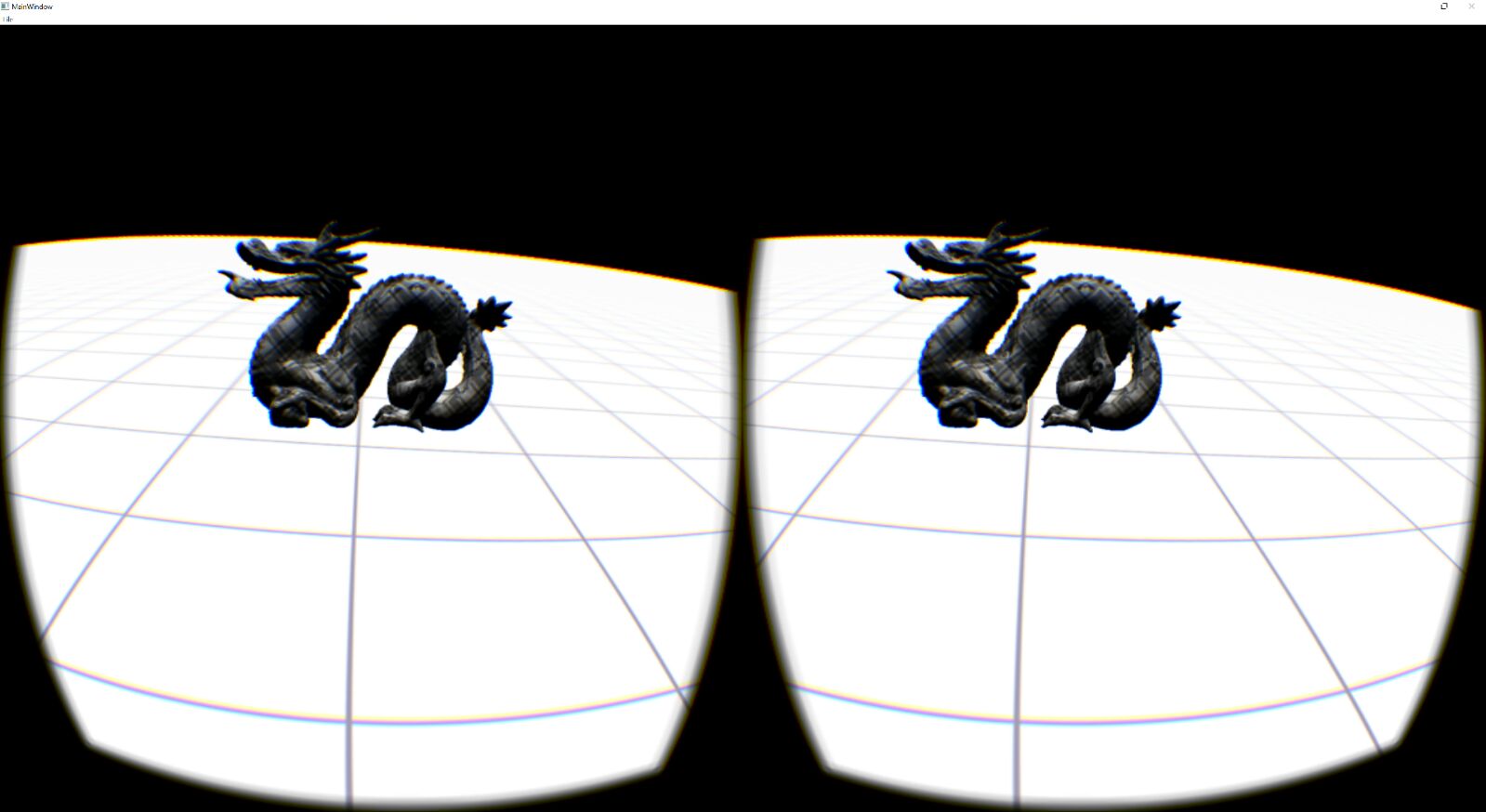

I am the new project manager working on the new version of Jahshaka, which should support VR. Qt3D was chosen to be its new render system.

Given Qt3D's structure, is it possible to integrate the Oculus SDK with a Qt3D application? My two main obstacles are:

- I can't use the textures from the texture swap chain created by the Oculus SDK as a render target attachment.

- I am not able to call ovr_SubmitFrame at the end of each frame, since Qt3D doesn't have a signal that would allow me to do so. I have tried to use a RenderAspect in synchronous mode so I could take control of the rendering loop myself, but it only causes the application to crash unexpectedly.

Has anyone successfully gotten them to work together? If so, how did you overcome these issues?

Also, are there any plans for built-in support of VR SDKs in Qt3D in future releases?
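For reference, the per-frame ordering behind the two obstacles above can be sketched without the SDK. The block below is a minimal model and not Oculus SDK code: `FakeSwapChain` and `runFrame` are hypothetical names, and the two callbacks stand in for "attach the texture to the FBO and draw the scene" and for the end-of-frame `ovr_SubmitFrame` call. It only illustrates that each frame renders into the chain's current texture, commits it, and then submits.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-in for an SDK texture swap chain: a ring of GL texture
// ids plus the index of the texture the application should render into next.
struct FakeSwapChain {
    std::vector<unsigned> textureIds;
    std::size_t current = 0;

    unsigned currentTexture() const { return textureIds[current]; }

    // Models "commit": the current texture is handed over and the ring advances.
    void commit() { current = (current + 1) % textureIds.size(); }
};

// Runs one frame in the order acquire -> render -> commit -> submit.
// attachAndRender: assumed to bind the texture as the FBO colour attachment
//                  and draw the scene.
// submit:          assumed to wrap the SDK's end-of-frame call.
// Returns the texture id that was rendered into.
inline unsigned runFrame(FakeSwapChain &chain,
                         const std::function<void(unsigned)> &attachAndRender,
                         const std::function<void()> &submit)
{
    assert(!chain.textureIds.empty());
    const unsigned tex = chain.currentTexture();  // acquire
    attachAndRender(tex);                         // render into it
    chain.commit();                               // hand the texture over
    submit();                                     // end of frame
    return tex;
}
```

Whatever hook ends up driving this in Qt3D has to run on the thread that owns the GL context, which is exactly the part the question is about.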

-

I suggest you ask this question on the Qt Interest mailing list. Sean, who is the main brain behind Qt3D, is active there, and you are more likely to get a reply.

-

It's very frustrating, but it seems the only way to get any help with Qt3D is to pay KDAB for a support contract... is no one out there working on VR with Qt3D?

Qt3D seems like it has so much promise, but if the guys behind it are selling support and won't help, then how can we help to make it better or share what we are doing with others ourselves?

We really are stuck with our Oculus SDK implementation and would be really grateful if anyone can help us to get it working... especially since we are OSS as well here...

-

@jahshaka Hi, and welcome to the Qt forum! The problem with Qt3D is that the API has only recently become stable and there is not much documentation yet. So I guess only a few people are using it right now, and that's why it's hard to get help. Also, the module has very few developers; it's more or less only Sean.

-

So far the only help we have been able to get has been in the form of an email slamming us for complaining about a lack of help, and then a quote for support services that cost $150 GBP per hour... we really thought Qt and Qt3D were OSS, so it's a bit of a shock.

We are trying to rebuild Jahshaka using a pure Qt pipeline. It has had over 3 million downloads and still gets over 1000 downloads a day despite having stalled out a few years ago. It was built using Qt, so we are just trying to stay true to Qt and the OSS philosophy, and we hoped to promote Qt3D alongside our work as we restart the project.

But we can't really do that for $150 GBP an hour...

We were told there is only one developer working on Qt3D, Sean, so we offered to have our lead developer help him, but that has been turned down. We also offered to send them our Oculus DK2 to test with, since they said they don't have one, but that has been turned down as well...

We are looking for some help or direction from the community, as we can't really build a Qt-based OSS VR authoring toolkit without any VR support while other frameworks are so far along.

-

I'm not too familiar with Qt3D, but I've been brushing up on it. It looks like most of what you'd need is already built into the project. It supports rendering to an offscreen surface. It supports rendering a single framegraph to multiple viewports from different camera angles (there are examples of both in the examples folder).

So what's needed is a mechanism to do some basic initialization of the Oculus SDK, a means of using the SDK as an input aspect in order to allow the head pose of the HMD to drive the camera position(s), and a means of detecting the rendered frame completion and taking the resulting image in the FBO and pushing it to the Oculus SDK (perhaps something extending QAbstractFrameAdvanceService?).

To do it quickly and easily you'd need familiarity with both the Oculus SDK and the internals of Qt3D. Since the latter apparently is just one person who is uninterested in the Oculus SDK, anyone who does this is essentially promising to dive into a bunch of source code they aren't familiar with, that isn't well documented, and that may not be completely stabilized, and start tinkering with it to determine the best way to integrate VR functionality. If they're smart, they'll be looking to do it in a way that is agnostic about the actual HMD type so that you could support either Vive or Oculus. Diving into someone else's undocumented code isn't trivial, so it's not surprising that it would end up being pricey.

If the examples for Qt3D included some kind of demonstration of using an offscreen surface and a framebuffer to capture the output, it would make the work significantly easier. Since that would have broader utility than specifically Oculus support, perhaps you should pester Sean to do something along those lines.
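To make the three pieces listed above (initialization, head pose as input, pushing the finished frame to the device) a bit more concrete, here is a rough sketch of what an HMD-agnostic seam could look like. Everything in it is an assumption for illustration: `HmdBackend`, `FakeHmd`, and `renderStereoFrame` are made-up names, the pose is reduced to a position, and nothing here touches Qt3D or either SDK. A real Oculus or OpenVR backend would implement the interface by wrapping the respective SDK.

```cpp
#include <cassert>
#include <vector>

struct Pose { float x, y, z; };  // position only, to keep the sketch short

// Hypothetical device-agnostic interface.
class HmdBackend {
public:
    virtual ~HmdBackend() = default;
    virtual bool init() = 0;                      // SDK/session setup
    virtual Pose eyePose(int eye) const = 0;      // 0 = left, 1 = right
    virtual void submit(unsigned textureId) = 0;  // hand the finished frame over
};

// Fake device used to exercise the interface without any hardware.
class FakeHmd : public HmdBackend {
public:
    bool init() override { ready = true; return true; }

    Pose eyePose(int eye) const override {
        const float halfIpd = 0.032f;  // assumed half eye separation, metres
        return Pose{eye == 0 ? -halfIpd : halfIpd, 1.7f, 0.0f};
    }

    void submit(unsigned textureId) override { submitted.push_back(textureId); }

    bool ready = false;
    std::vector<unsigned> submitted;
};

// One frame: render each eye with its pose, then push the result to the HMD.
// renderEye is assumed to draw the scene into the given eye's viewport.
template <typename RenderEye>
void renderStereoFrame(HmdBackend &hmd, unsigned fboTexture, RenderEye renderEye)
{
    for (int eye = 0; eye < 2; ++eye)
        renderEye(eye, hmd.eyePose(eye));
    hmd.submit(fboTexture);
}
```

Keeping the device behind an interface like this is what would let the same scene code target either headset.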

-

Thanks Brad for the reply, very helpful :) The Qt 5.7 release is just a few months off, so it may be better for us to wait for that and then try to get some help with making this happen, since as @kshegunov mentioned the Qt3D API should be locked down by then and in better shape for tinkering.

Like you said, it would really be great if we could get some examples of offscreen rendering etc., but that doesn't seem to be happening short of paying out mad money, so we will just focus on cleaning up our code in the meantime so we can get it out there as well. It's just hard to code in 3D with the VR kit sitting next to us and not be able to use it :) as it really is mind-blowing stuff.

I also totally agree: VR support should be abstracted so it can work with Oculus and Vive and others. We are trying to get a Vive for testing here as well. We would just love to help push Qt3D VR support ahead and start to figure out some of the core issues and killer features in the meantime. Rendering widgets inside 3D space would also be wicked cool, I think, like Google is doing in Tilt Brush.

-

@Brad-Austin-Davis said:

So what's needed is a mechanism to do some basic initialization of the Oculus SDK, a means of using the SDK as an input aspect in order to allow the head pose of the HMD to drive the camera position(s), and a means of detecting the rendered frame completion and taking the resulting image in the FBO and pushing it to the Oculus SDK (perhaps something extending QAbstractFrameAdvanceService?).

That is a really good idea, but unfortunately there may be no way for me to set the active QAbstractFrameAdvanceService. VSyncFrameAdvanceService is created and assigned directly to the renderer:

https://github.com/qtproject/qt3d/blob/5.6/src/render/backend/renderer.cpp#L140

https://github.com/qtproject/qt3d/blob/5.6/src/render/backend/renderer_p.h#L230

In the QRenderAspect, it is added directly as a service, but the service provider isn't checked for an already set QAbstractFrameAdvanceService implementation:

https://github.com/qtproject/qt3d/blob/5.6/src/render/frontend/qrenderaspect.cpp#L412

Are there any other suggestions to go about doing this without modifying Qt3D's source?

Thanks in advance

-

@njbrown said:

Are there any other suggestions to go about doing this without modifying qt3d's source?

While that is obviously not an immediate solution, doing that and proposing it through Gerrit could be the best option long term.

That way seems to have been used quite successfully by KDE developers to extend Qt in ways they needed.

Cheers,

-

Hello everyone,

I also wanted to integrate the Oculus SDK in Qt3D and just started searching to see whether there have already been efforts.

My thoughts so far are:

- make it available for everyone on GitHub as a plugin for everyone to use, not part of another bigger project

- provide a layer to use OpenVR (Vive) or libOVR (Oculus) directly with the same Qml Objects

Maybe the Qt3D Team is more likely to help, if the result is a standalone VR addition to Qt3D? So they would not have to dig into someone's code.

- I wanted to add a Qt3DCore::QEntity named "VRManager" or "VRController" which handles:
  - initialization,
  - creating a texture swap chain as a Qt3DRender::QRenderTarget,
  - being used as a context for all other objects.

- Other objects:
  - In OpenVR I saw there is a list of 3D objects (controllers, lighthouses, ...) which are tracked. One can iterate through all objects. I wanted to subclass "Qt3DRender::QGeometry" to access these objects in QML (I think libOVR will have/has something similar, at least when the Touch controllers are out).

- Head pose:
  - A headset camera entity with:
    - read-only: head pose and eye offsets that can be used as input
    - read/writable: position, viewCenter, upVector, ... (without the head pose applied)

The final head pose/viewMatrix would not be set by QML, as it is crucial that this is done just before rendering. The C++ side of VRManager would query libOVR for a prediction of the head pose just before the draw call. This prediction is then applied to the viewMatrix. In particular, this is not a task that can be done in a separate thread (I think usually all input is handled by the "logic" aspect in another thread).
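As a rough illustration of that last-moment step, the sketch below composes a rig pose (the read/writable part, set from QML) with a tracked head pose (the read-only part, sampled just before the draw call) and maps a world point into eye space. It is deliberately simplified and entirely hypothetical: poses are a position plus a yaw angle about the Y axis only, and `Pose`, `composeRigAndHead`, and `worldToEye` are invented names, not Qt3D or libOVR API. A real implementation would use full rotations and 4x4 matrices.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Pose { Vec3 position; double yaw; };  // yaw in radians, about +Y

// Rotates v about the Y axis by angle a (right-handed).
inline Vec3 rotateY(const Vec3 &v, double a)
{
    const double c = std::cos(a), s = std::sin(a);
    return Vec3{v.x * c + v.z * s, v.y, -v.x * s + v.z * c};
}

// rig:  pose set by the application.
// head: tracked pose relative to the rig, sampled right before drawing.
// Returns the eye's pose in world space.
inline Pose composeRigAndHead(const Pose &rig, const Pose &head)
{
    const Vec3 offset = rotateY(head.position, rig.yaw);
    return Pose{Vec3{rig.position.x + offset.x,
                     rig.position.y + offset.y,
                     rig.position.z + offset.z},
                rig.yaw + head.yaw};
}

// Applies the view transform (the inverse of the eye's world pose) to a point.
inline Vec3 worldToEye(const Pose &eye, const Vec3 &p)
{
    const Vec3 d{p.x - eye.position.x, p.y - eye.position.y, p.z - eye.position.z};
    return rotateY(d, -eye.yaw);
}
```

The point of keeping the two poses separate is that the application can move the rig at its own pace while the head contribution is refreshed as late as possible.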

I was not aware of QAbstractFrameAdvanceService yet... Has anyone tried to subclass the complete QRenderAspect?

Especially when the scene is rendered on the monitor at the same time, it would be best to have a separate thread (and thus an additional aspect?) for each rendering.

I'd love to see VR in Qt3D and would like to be involved in its development.

Cheers

-

It looks like when "Scene3D" is used inside a QQuickView, Qt3D integrates into the rendering cycle of the QQuickView. Maybe this is the solution to call "ovr_CommitTextureSwapChain" and "ovr_SubmitFrame" at the right time.

This would require a special "QQuickView" for VR that synchronizes to the HMD. This would not be able to do VSync to a Monitor.

I'm still not sure what a proper architecture would look like for this case. With one QML context for the scene shown on both devices, the elements for monitor and HMD would have to update at different speeds.

Two different QML engines could not share QML elements (ids), but could synchronize to the two devices and maybe share a mirror texture of the HMD.

-

@Dabulla you're right, Qt3D uses synchronous rendering when used in a QQuickView, but it did not work in the C++ version the last time I tried. The official version of Qt3D has come out since, so I'll have to try again and tell you guys if it works or not. It's probably the best option for getting VR working right now, because you know exactly when a frame starts and when it ends.

Another hackish option is to use a QFrameAction component to submit and swap the render textures each frame... but that will only work if QFrameAction runs its callback on the same thread as the renderer. I'll have to investigate this further.

Also, subclassing the QRenderAspect wouldn't do much, since the actual rendering takes place in another class called Renderer, which isn't publicly accessible. So that option wouldn't work.
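One way to investigate the QFrameAction idea is to guard the per-frame work with a thread check, so a callback that arrives on the wrong thread is detected instead of crashing inside GL or the VR SDK. The sketch below uses only the C++ standard library; `RenderThreadCheck` is a hypothetical helper, and in a Qt application the same comparison could be made with `QThread::currentThread()`.

```cpp
#include <cassert>
#include <thread>

// Hypothetical helper: remembers which thread owns the GL context and lets a
// per-frame callback decide whether it is safe to touch GL or the VR SDK.
class RenderThreadCheck {
public:
    explicit RenderThreadCheck(std::thread::id renderThread)
        : m_renderThread(renderThread) {}

    bool onRenderThread() const
    {
        return std::this_thread::get_id() == m_renderThread;
    }

    // Runs work only when called on the render thread; returns whether it ran.
    template <typename Work>
    bool runIfOnRenderThread(Work work) const
    {
        if (!onRenderThread())
            return false;
        work();
        return true;
    }

private:
    std::thread::id m_renderThread;
};
```

Constructing the check from inside the renderer (where `std::this_thread::get_id()` is known to be the render thread) and calling `runIfOnRenderThread` from the frame callback would show quickly whether the two run on the same thread.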

-

@Dabulla Nick (njbrown) is working on a new 3D framework built on top of Qt and QOpenGL that will have native support for the Oculus, so hit him up; it would be great if you can help out! We will probably call it Qt3DC (for community edition, since Qt3D seems to be enterprise only).

-

@jahshaka

Qt3D isn't enterprise only, it's all part of Qt.

KDAB have just been contributing the most to it, and their priorities might not match yours (different kinds of target applications bring up different kinds of bugs).

So it would probably make more sense to align development efforts in one place and not build another module that for the most part duplicates work. Especially since you would know what to fix, getting patches in is not overly difficult.

-

Actually @tekojo's wrong (wink), Qt3D desperately needs better docs for the features it has. So you aren't even required to learn its internals; just contributing know-how would greatly improve the module and your understanding of it. I myself have asked the lead dev on the mailing list on several occasions how things are done, and he's been responsive and understanding. I think you went the wrong way about it if you expected him/them to give you code or help with your release. That would require time, and time costs are rather steep in our tidy little field. Instead I'd focus on seeking knowledge about the module: learning how things are done and contributing examples/docs with that new knowledge would be very, very welcome. I'm quite convinced Sean would jump at the chance of someone helping with the docs (it's one of the reasons why Qt3D is open source).

-

@kshegunov we have tried to get help many times and received quotes for paid services on two occasions and zero help whatsoever, not even answers to basic questions. This is so totally un-open-source it's not funny.

Sean has not answered any of our posts here or on the mailing list, that's for sure... and others have the same issue.

We have already started on our engine (and it already has Oculus support!!), and since it's built on QOpenGL it should be easy for others to use and get involved... we hope a community-built and community-supported engine will find a better home and better support.

As soon as we have something worthy of publishing we will post a link to our GitHub.

-

@jahshaka said in Qt3d + Oculus SDK:

Sean has not answered any of our posts here or on the mailing list, that's for sure... and others have the same issue.

I'm far away from that particular project, and I certainly don't want to appear to be defending him/KDAB. I just want the record to reflect that this is a user forum; devs don't frequent it. As for the mailing list, I see a single question posted (6th of May), which in all fairness indeed did not get any attention.

Good luck with your engine! I will follow its development with great interest, although I don't do any work with 3D or graphics.

Kind regards.